As a .NET developer who has been dabbling in the Salesforce platform recently, I was quite (happily) surprised to find out that Salesforce forces you to write unit-tests for any custom code that you develop on its platform! You must have code coverage of 75% or more to deploy any Apex code into production or package up any code for distribution on AppExchange – Salesforce’s community marketplace. While I suppose that developers can find ways to beat the system by writing meaningless tests that get them over the 75% mark, I’m glad that Unit Testing is at least “a thing” in this ecosystem and something that a developer on this platform gets introduced to from the early stages.

Unfortunately, this is not the case on many other platforms, even in my beloved .NET world. “Unit Testing”, or more generally automated testing, is often “out of scope” for most tutorials and introductory courses on the platform and as such, it’s not a practice that is often introduced to new developers in the ecosystem. And unlike Salesforce, which is a SaaS offering, .NET runs pretty much everywhere from Raspberry Pis to enterprise grade servers and everything in between, so there is not much that the framework can enforce in terms of having a set of automated tests or a certain percentage of code coverage, like the Salesforce folks do. Many smaller organizations do not have any codified standards that address unit-testing at a concrete level and as such, automated tests are pretty much non-existent in a lot of codebases. Then there are well-meaning developers who have learned at some level that automated testing provides a lot of value and try to introduce it into their workflow but soon find themselves in an uphill battle trying to put it into practice. They may hit resistance from the rest of the team and struggle with the lack of adoption. Or they may not know how to write good unit tests and instead of the practice making their lives easier, they find themselves fighting with their tests and eventually give up in frustration. Or they may struggle to pick the right framework. Or tools. Or how to integrate them into their existing CI/CD structures.

Let’s try to address some of these issues.

Over the course of these next few posts, I hope to provide a good jumpstart for automated testing, hopefully enabling you to adopt this good practice in your own development projects. As I am a .NET developer, the tools and patterns that I’ll demonstrate may not match your tech stack exactly but hopefully, you can at least walk away with some general guidelines and patterns that you can adapt to your own specific scenario. But first, as this is an introductory 101 level post, let’s answer some of the basic questions surrounding the domain of automated testing.

What is Automated Testing?

I was struggling to come up with a succinct, elemental definition for this term. At the most basic level, Automated Testing is the practice of writing test code and/or setting up automation to exercise the functionality of the software.

However, the domain of Automated Testing goes far beyond this basic tenet.

Firstly, we must recognize that there are different types of automated tests – Unit Testing, Integration Testing, End-to-End Testing, Smoke Testing, Load Testing, Black Box Testing, White Box Testing, and many more.

Secondly, there is the whole world of testing tools and frameworks. While you can cobble together your own scripts and tools to “automatically test” your software, most software languages and frameworks out there have corresponding well-established and recognized testing frameworks and tools to address your testing concerns. There are frameworks and tools solely concentrating on establishing “stand-ins” to represent (or mock) a portion of the software for the purposes of testing another portion. There are frameworks and tools solely concentrating on the readability of the tests. There are test runners out there whose sole responsibility is to execute the tests that you write and provide results. There are code-coverage tools that report on what portions of your code base and what percentage of it is covered by an automated test, presumably to draw your attention to areas of your codebase that can be vulnerable to an unintended failure.
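To make the idea of a “stand-in” a little more concrete, here is a minimal sketch. It is written in Python only because it keeps the example short; it is not the .NET tooling this series will use. The `notify_overdue` function and the shape of the invoice data are hypothetical, invented purely for illustration. The `Mock` object from Python’s standard `unittest.mock` module plays the role of a real mail service, so the test can check the logic without sending any email.

```python
from unittest.mock import Mock


def notify_overdue(invoices, mailer):
    """Send one reminder per overdue invoice and return how many were sent.

    Hypothetical function under test; `mailer` is anything with a send() method.
    """
    sent = 0
    for invoice in invoices:
        if invoice["overdue"]:
            mailer.send(invoice["email"], "Your invoice is overdue")
            sent += 1
    return sent


def test_notify_overdue_only_mails_overdue_customers():
    # The Mock stands in for the real mail service and records every call.
    mailer = Mock()
    invoices = [
        {"email": "a@example.com", "overdue": True},
        {"email": "b@example.com", "overdue": False},
    ]

    assert notify_overdue(invoices, mailer) == 1

    # Verify the stand-in was called exactly once, with the expected arguments.
    mailer.send.assert_called_once_with("a@example.com", "Your invoice is overdue")
```

A test runner such as pytest would typically discover and run a function named like this one; that naming is a convention of the runner, not something the code itself requires.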

Another aspect of this is automating the execution of the tests themselves. Usually they are wired into CI/CD pipelines so that test suites run whenever a new piece of code is promoted to an environment; when an underlying test fails, the build is “failed” and the code is blocked from being promoted. This allows the developer to fix the issue before trying to move that code up again.

Why Write Automated Tests?

You may have already answered this question to some degree, but it is good to be on the same page as to why we want to do automated testing. Or perhaps, you are looking for some concrete points that you can use to vocalize this to your management or your team members.

Manual Testing Doesn’t Scale

I’ve been on teams where you develop a feature, release it to a testing environment and then tag a tester to test out that feature. While such a practice may work in the beginning of a project or product, it may not work in the long run. When your software has a limited set of features, asking someone to “take a look” and give you “the green light” is perhaps doable. But as the complexity of your software grows and as you have more and more interlocking pieces, such manual testing becomes impractical. Especially, regression testing. Your new change may affect a seemingly unrelated aspect of your software due to some dependency and now the tester will have to test and confirm the software in its entirety to ensure that your update didn’t have an adverse impact elsewhere.

However, I’d also like to add that manual testing still has a place in software development. You’ll want your critical paths tested and certified by a human tester for a variety of reasons. You may find that there were some aspects of a feature that were not adequately captured in an automated test. There may be features that pass on paper but still have other issues in terms of user friendliness and the overall usability of the software. There is still room for things to slip through the cracks and you’ll want to augment your automated tests with some level of manual testing. There may be insights that a human tester can draw from their interactions with the software that will not come through in an automated test. However, I’m of the opinion that automated tests should cover a majority of your scenarios and manual testing should be few and far between. We’ll revisit this point when we look at the Testing Pyramid, a bit later.

Automated Tests are Quick(er)

Automated tests, especially unit tests, generally run in sub-second times. With the ability to run tests in parallel, you can often run tens or even hundreds of tests in a matter of seconds. Even when you consider integration tests with databases and data persistence concerns, you can still run them in a fraction of the time it would take to test the same features manually.

Automated Tests are Reliable

Generally speaking, automated tests are reliable. Sure, you can still write flaky tests that give you inconsistent results if you use a bad testing framework, rely on incorrect assumptions, or fail to account for network latency and other external factors, but on the whole they provide a lot more reliability than manual tests. With automated tests, you can execute them over and over again without ever missing a step. Or mistyping something during a test. They’ll run exactly how you programmed them. On the other hand, a human tester can miss a step or do a step incorrectly, causing a false positive or a false negative.
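As a small illustration of how an external factor can make a test flaky, consider code that depends on the current time. This is a sketch in Python (chosen for brevity, not the .NET stack of this series), and the `greeting` function is hypothetical. If the function read the system clock itself, a test for it would pass in the morning and fail in the afternoon. Passing the time in as a parameter is one common way to keep the test deterministic.

```python
from datetime import datetime


def greeting(now: datetime) -> str:
    """Return a greeting for the given time; hypothetical function under test.

    The time is a parameter rather than a call to datetime.now(), so a test
    controls it and gets the same result no matter when it runs.
    """
    return "Good morning" if now.hour < 12 else "Good afternoon"


def test_greeting_before_noon():
    assert greeting(datetime(2024, 1, 1, 9, 0)) == "Good morning"


def test_greeting_after_noon():
    assert greeting(datetime(2024, 1, 1, 15, 0)) == "Good afternoon"
```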

They Document Your Codebase

Automated Tests have a good side-effect of documenting your codebase. When you’re looking at someone else’s code or looking at an existing codebase, you can look at the unit-tests in the project to quickly get an understanding of what the software is doing. They usually read much better than just looking at code, alone.

The Principle of Compound Interest

The test you write today against a small, seemingly insignificant portion of the codebase not only benefits the testing of that isolated piece of code, it helps all future development done on that project. You’re adding to a library of tests that represents the overall health of that codebase. The next developer that’s working on the next feature can write that feature with more confidence now because they can rely on your test to confirm that their new addition has not caused an unintended regression on something that you made, previously.

Be Confident, Be Happy

We’ve all been there. You join a new team or a new organization and you find yourself responsible for the upkeep of a piece of software that has been around for many years. The folks who wrote the system are now long gone and you don’t have the luxury of asking them how something works, nor can you rely on them to jump in and fix something in a time-crunch. You are it. How about adding a new feature or changing an existing one? We’ve all sensed that fear and trepidation of unintentionally breaking something, especially when the entire business relies on that software! You are afraid to touch it because while you have a rough idea of what the software is doing, you really don’t know exactly how something works and you don’t want to be that guy who brought the business to a grinding halt!

Automated tests provide you with the guard-rails that you need in such a scenario. You can make changes unimpeded because you can run those unit-tests to confirm that they all light up green, still passing as they did prior to your modification. You can proceed with some degree of confidence that you have not caused some unintended harm with the change you made.

The Testing Pyramid

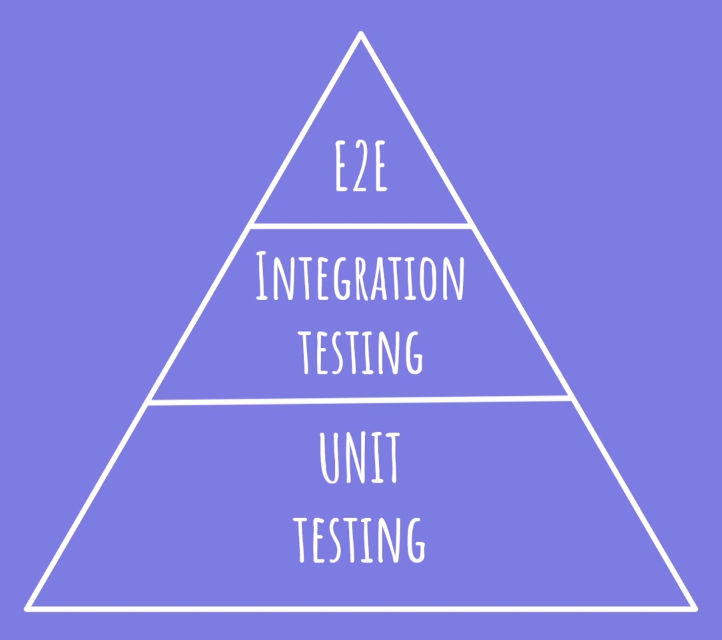

You may have come across this below (or a variation of it), in your research on automated testing:

The Testing Pyramid gives you a rule-of-thumb, a frame of reference as to what the composition of your automated-testing suite should look like. The diagram is indicating that you should strive to have a lot more unit tests in your setup (as that takes up the biggest portion at the base of the pyramid) and you should spend fewer resources on creating and maintaining end-to-end (E2E) tests (as indicated by its small surface area at the top of the pyramid). And yes, this is not a hard-and-fast rule and you’ll find developers and architects at all ends of the spectrum advocating how you should spend more time on end-to-end tests as they closely represent the true health of the software as it mimics human interactions or how you should put most of your efforts on integration tests as they provide a good middle ground. I personally try not to get caught up in dogma and instead try to have a pragmatic approach to all things development, including my efforts in automated testing. At some level, I think we all agree that automated tests provide a set of benefits that we can’t replicate with manual testing alone.

The three major types of automated tests are shown in the pyramid above and are described below.

End-to-End (E2E) Tests

These are generally the tests that closely match what we do, as humans, in terms of manual testing. When we do manual tests, we are opening up the application, perhaps entering some data and confirming that the results match our expectations. Similarly, end-to-end tests do this in some sort of automated fashion. In the world of web application development where your application lives in the context of an internet browser, end-to-end tests automate the process of opening up browser windows, entering URLs and waiting for the page to load, clicking, entering text and performing other interactions that mimic our own behaviors in interacting with the application. There are frameworks out there that use the same engines that popular browsers use (e.g., Microsoft Edge, Google Chrome, Mozilla Firefox, etc.), interacting with those browser engines in an automated fashion and capturing results. Cypress.io and Selenium are two well-known players in this space.

However, as indicated by the Testing Pyramid, these tests are considered “expensive” tests. They take longer to develop and take more effort to maintain. Relatively speaking, they run slower. Since they generally mimic end-user behavior, efforts must be made to address state management – setup and clean up of data in databases, for instance. They can be brittle as there are many pieces involved, each of which can introduce latency and other variables, potentially causing false positives while testing. As a rule-of-thumb, we should minimize our reliance on these and perhaps only set up a few of them to test out the most critical paths/operations of our software.

Integration Tests

Many argue that these are the best tests to write. Integration tests take a sub-section of the software and test it as a unit. If that piece involves a web-server and a connection to a database, all those pieces are accounted for in the test so that it can test the feature from “front to back”, providing a more realistic picture of the health of the software.
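Here is a rough sketch of what such a test can look like, again in Python for brevity rather than the .NET stack of this series. The `CustomerRepository` class and its table are hypothetical. The point is that the test talks to a real database engine (an in-memory SQLite database from Python’s standard library) instead of a stand-in, so the SQL and the code around it are exercised together.

```python
import sqlite3


class CustomerRepository:
    """Hypothetical data-access class that stores customers in a SQL database."""

    def __init__(self, connection):
        self._conn = connection
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS customers "
            "(id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )

    def add(self, name):
        cursor = self._conn.execute(
            "INSERT INTO customers (name) VALUES (?)", (name,)
        )
        self._conn.commit()
        return cursor.lastrowid

    def find_name(self, customer_id):
        row = self._conn.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


def test_customer_round_trip():
    # A fresh in-memory database per test keeps setup and clean-up trivial.
    conn = sqlite3.connect(":memory:")
    try:
        repo = CustomerRepository(conn)
        new_id = repo.add("Ada")
        assert repo.find_name(new_id) == "Ada"
        assert repo.find_name(new_id + 1) is None
    finally:
        conn.close()
```

An in-memory database is a convenience for this sketch; a project may well prefer to run its integration tests against the same database engine it uses in production.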

Unit Tests

In the Testing Pyramid, these types of tests take the most real-estate and prominence. Relatively speaking, they are easier to write and execute as they test the most basic and discrete units of the software – something usually as small as a single method in a single class. Since they deal with such a small surface area, they are generally very quick to run and can be executed during development time without having to bootstrap databases or third-party services.
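As a minimal sketch of that idea, the example below tests a single function in isolation. It is in Python for brevity rather than the .NET tools this series will use, and the `apply_discount` function and its rules are hypothetical. Notice that nothing needs to be set up: no database, no network, no services.

```python
def apply_discount(price: float, percent: float) -> float:
    """Return the price reduced by a percentage; hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_apply_discount_reduces_price():
    assert apply_discount(200.0, 25) == 150.0


def test_apply_discount_rejects_invalid_percent():
    # An out-of-range percentage should be refused rather than silently applied.
    try:
        apply_discount(200.0, 150)
    except ValueError:
        return
    raise AssertionError("expected ValueError for percent > 100")
```

Each test checks one behavior and names it, which is also what lets tests like these double as documentation.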

Closing Remarks

This was just an introductory post in a new series of articles that I plan to publish in the coming weeks. While we looked at Automated Testing at a high level in this post, I plan to look at some concrete examples in the coming episodes. My hope is to introduce specific frameworks, demo specific tools and, using those, give you some concrete examples of automated testing that you can then apply to your own stack and situations.